Everything you need to know about WordPress Robots.txt

Today we will talk about robots.txt, a small but powerful SEO tool that helps you control how search engines crawl your website. In this guide, you will learn what robots.txt is and how it relates to webmasters, search engines, and SEO.


What is WordPress robots.txt?

WordPress robots.txt is a plain text file that tells search engine crawlers which pages and folders of your website they may crawl and which ones they should stay away from. It can also point crawlers to your sitemap (the sitemap itself is what you submit in Google Search Console).

By giving this information to the “robots” of search engines, we can allow or restrict their access to the pages of our WordPress website.

How to use it?

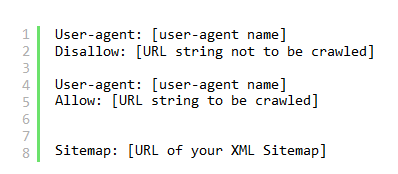

Robots.txt is a plain text file in the root directory of your website hosting. You write the instructions as simple directives, one per line, and you are good to go. Robots.txt has the following format.

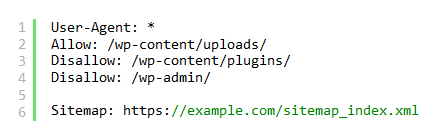

No need to panic; you don’t have to be a coder to edit this format. In the User-agent line, you put the name of the crawler the rules are meant for, or * to address all crawlers. In the Disallow line, you put the folders or pages that you don’t want to be crawled. In the Allow line, you put the pages you want search engines to crawl. An edited WordPress robots.txt file usually allows the uploads in the wp-content folder, blocks the plugins and admin folders, and ends with a Sitemap line.

In the Sitemap line, replace “example.com” with your own website’s address so that the line gives the full URL of your sitemap. With rules like these, you allow the uploads in the WordPress content folder and disallow the plugins and admin folders.
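The edited file itself is not reproduced here, so below is a minimal sketch of one that matches the description, wrapped in Python so you can check it locally with the standard library’s urllib.robotparser module. The exact paths and the sitemap URL https://example.com/sitemap.xml are assumptions; substitute your own.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical reconstruction of the file described above; the sitemap
# URL is a placeholder, use the URL of your own sitemap.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask the parser what a generic crawler ("*") may fetch.
for path in ("/wp-content/uploads/logo.png",
             "/wp-content/plugins/akismet/readme.txt",
             "/wp-admin/options.php"):
    allowed = parser.can_fetch("*", "https://example.com" + path)
    print(path, "->", "allowed" if allowed else "blocked")
```

Running it should report the uploads file as allowed and the plugin and admin paths as blocked. Python’s parser only approximates how real search engines read the file, but it is handy for catching obvious mistakes.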

How to write Robots.txt file?

Now that you know what the robots.txt file is, let’s talk about its format and how to write or edit it. First, you name the user-agent, and then you set the permissions for that user-agent. But what is a user-agent?

User-agent: despite the name, this is not an agent; it is the name a bot uses to identify itself. To write rules only for Bing, put bingbot in the User-agent line. If you want only Bing to crawl your website, you also need a second group, addressed to * (all bots), that disallows everything.
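As a sketch of the “only Bing” case, the hypothetical file below gives bingbot its own group with an empty Disallow line (which blocks nothing) and blocks every other bot in the * group. The check uses Python’s urllib.robotparser; the page URL is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical "only Bing may crawl" file: an empty Disallow lets bingbot
# in, and the "*" group blocks every other crawler.
ROBOTS_TXT = """\
User-agent: bingbot
Disallow:

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/sample-page/"
print("bingbot:", parser.can_fetch("bingbot", url))
print("Googlebot:", parser.can_fetch("Googlebot", url))
```

A crawler follows the group that names it and falls back to the * group otherwise, so bingbot gets through while Googlebot does not.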

Allow: All files on your website server are allowed for crawlers by default, so robots.txt is mainly used to restrict specific files. The Allow directive is useful when you want a subfolder to be crawled but not the main folder that contains it. In that case, disallow the main folder and allow the subfolder.
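Here is a minimal sketch of that pattern, again checked with Python’s urllib.robotparser: the hypothetical file blocks /wp-content/ but opens /wp-content/uploads/. The Allow line is written first on purpose. Google picks the most specific (longest) matching rule, so order does not matter there, but Python’s parser stops at the first rule that matches.

```python
from urllib.robotparser import RobotFileParser

# Block the main folder but open one subfolder inside it.
# Allow comes first: Python's parser uses the first rule whose path
# matches, while Google uses the longest (most specific) match, so
# this order gives the same answer in both.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def crawlable(path: str) -> bool:
    """Return True if a generic crawler may fetch this path."""
    return parser.can_fetch("*", "https://example.com" + path)


print(crawlable("/wp-content/uploads/2024/01/photo.jpg"))  # subfolder
print(crawlable("/wp-content/themes/style.css"))           # main folder
```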

Disallow: this directive tells robots not to crawl the folders and files you list, such as ones that are of no use in search results.

Sitemap: this line gives the full URL of your sitemap, the file that lists the pages of your site. It gives the crawler a plan for crawling the website, which can help your pages get indexed more quickly.
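The Sitemap directive is not tied to any User-agent group, and one file can list several sitemaps. The sketch below, with placeholder sitemap URLs, shows Python’s urllib.robotparser reading them back with site_maps(), which needs Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Sitemap lines belong to the whole file, not to a User-agent group,
# and a file may list more than one. Both URLs are placeholders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://example.com/post-sitemap.xml
Sitemap: https://example.com/page-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the listed URLs, or None if the file has none.
for sitemap_url in parser.site_maps() or []:
    print(sitemap_url)
```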

Where to put robots.txt?

Create a new text file named robots.txt, add the directives described above, and upload it to the root directory of your website.

Using cPanel.

- Log in to cPanel.

- Open the File Manager.

- Upload the text file to the public_html folder, which is the root directory of your website.
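Crawlers only look for robots.txt at the top level of a host, so a copy placed inside a subfolder such as /blog/ is ignored, and a subdomain needs its own file. The hypothetical helper below illustrates that lookup with Python’s urllib.parse.urljoin; it is a simplification for illustration, not how any particular crawler is implemented.

```python
from urllib.parse import urljoin


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that applies to this page's host."""
    # A reference starting with "/" replaces the whole path (and query),
    # so the file is always looked up at the root of scheme + host.
    return urljoin(page_url, "/robots.txt")


print(robots_url("https://example.com/blog/hello-world/"))
print(robots_url("https://shop.example.com/cart/?item=3"))
```

Both calls print a URL at the root of the host, which is why the file belongs in your site’s root directory.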

Using an FTP client.

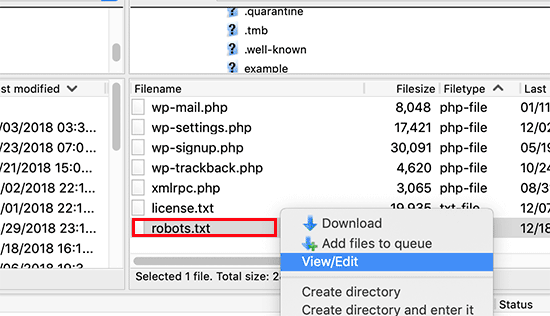

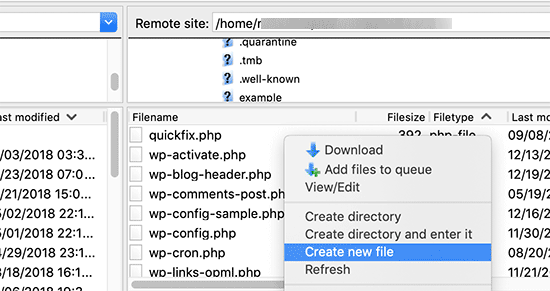

If you usually upload files to your website with an FTP client, this method is for you. Connect to your hosting server with the FTP client and open the root directory of your WordPress website; if a robots.txt file already exists, you will see it there.

- Right-click the file and select View/Edit to edit it.

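- If there is no robots.txt file there, you haven’t created one yet, so create it and upload it. (WordPress serves a virtual robots.txt when no real file exists, but that virtual file does not appear in the directory.)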

How to write or edit WordPress robots.txt?

You can write the file by hand as described above, but several plugins also let you write or edit robots.txt from your WordPress dashboard.

Using the Yoast SEO plugin.

With Yoast SEO, you can easily create or edit the robots.txt file in WordPress. Here are the steps to create a new robots.txt file; you can use the same method to modify an existing one.

- In your WordPress dashboard, select the Yoast plugin and select

- On the next page, select the File editor.

- On the next page, select the option “Create robots.txt file.”

- Now you can create or edit the file in the editor and save your changes.

Don’t confuse crawling with indexing.

Crawling is how bots read your pages; indexing is when a search engine stores a page so that it can show up in search results. Disallowing a page in robots.txt stops bots from crawling it, but it does not guarantee that the page stays out of the index. Google explains that pages disallowed in your robots.txt file can still get indexed one way or another.

For example, if another website links to a page that you have disallowed, that page can still get indexed.

Why should I care about WordPress Robots.txt?

There are a few reasons, and they are worth it. Let’s see what happens if you do not create a robots.txt file. Bots will still crawl your site, but you will have no say in how they do it. Here is what happens when you have no robots.txt file:

- Crawlers have a limited crawl quota for every site. When they try to crawl your whole site, they may hit that limit and leave before crawling everything, coming back for the rest on a later visit. This slows down the indexing process.

- Crawlers use a lot of your server resources when they crawl the whole site. You can limit that with the Disallow directive in robots.txt.

So, by adding robots.txt to your website, you can help your SEO and save bandwidth as well.

Testing the robots.txt file.

There are several tools and plugins available to check the robots.txt file, but the best method is to check it in Google Search Console. After all, you are doing all this for Google, right?

Follow these steps to get started.

- Open the robots.txt report in Google Search Console (it replaced Google’s older robots.txt Tester tool).

- Select the website whose robots.txt file you want to check, or add a new property.

- The report shows the robots.txt file Google found for your site, and if there is an error, you can find it on that page too.
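Before uploading a new file, you could also run a draft through Python’s urllib.robotparser as a quick local check. The blocked_paths helper below is hypothetical, and the draft shows a common slip: Disallow: /wp was meant to block only the admin area, but because rules match by prefix, it also blocks the uploads folder. Python’s parser applies the first matching rule and ignores * and $ wildcards, so for anything subtle, trust the Search Console report over this sketch.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt: str, user_agent: str, paths: list[str]) -> list[str]:
    """Return the paths that robots_txt would stop user_agent from crawling.

    Approximation only: Python's parser uses the first matching rule and
    does not understand * or $ wildcards inside paths.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in paths
            if not parser.can_fetch(user_agent, "https://example.com" + p)]


# Hypothetical draft: "/wp" is a prefix of "/wp-admin/" AND "/wp-content/".
draft = """\
User-agent: *
Disallow: /wp
"""

print(blocked_paths(draft, "Googlebot",
                    ["/", "/wp-admin/", "/wp-content/uploads/photo.jpg",
                     "/sample-page/"]))
```

It lists the admin and uploads paths as blocked, while the home page and the sample page stay crawlable.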

What if something goes wrong?

If anything goes wrong during the process, or if you would rather not do it on your own, you can contact a WordPress maintenance agency.

Conclusion:

The primary purpose of adding a robots.txt file is to optimize crawling of your site: you can prevent bots from crawling specific folders and thus save your server’s resources and time. If you are ever stuck with a WordPress problem, you can contact WPinCare to help you out. You can get the best deals for website maintenance and security.

We hope this article helps you understand the real purpose of the robots.txt file and how to add it.