5 Things Every Web Developer Should Know About SEO

When you first try to understand how search engine optimization (SEO) works, it is difficult to know where to start. There is a lot to digest. And just when you think you “know” something, Google releases another algorithm update that changes things on you.

But if you are a web developer, there is good news: understanding a few basics will help you code pages that perform well when Google crawls them.

To achieve that goal, keep these SEO tips for developers in mind while working on your website.

Understand the Search Process

The first thing that a web developer needs to keep in mind is the relationship between web pages and search engines.

Most people think that the Internet is a bridge that people walk across to reach their destination.

In fact, it is more like a restaurant without a menu. When a user performs a search, they write down an order and hand it to the waiter (the search engine). The waiter then looks for the most suitable chef (the website) to fill the order.

This is what happens every time a user performs a search. The engine goes back and forth between the user and the page. There is no solid connection between the two, just constant exchanges.

The developer’s job is to do everything possible to ensure that the search engine can accurately judge whether the page will satisfy the user’s search.

Learn to Write a Good URL

The first step in making a page easy to find is to write an appropriate URL.

The URL is the first thing that search engines see and also gives users the first impression of the page. How a search engine reads the address of a web page is a determining factor in the effectiveness of SEO.

An error in the URL can affect your ranking (seriously).

A URL has eight parts:

- The protocol is the set of rules that a browser and the web server use to communicate with each other. This is the http:// or https:// part of the URL. Browsers usually add this part automatically when a user leaves it out.

- The root domain is the core of the URL, under which everything else sits. Hence the name. Often, this is the name of the website.

- A subdomain is a subdivision of the root domain. Good examples are sites that use different subdomains for different languages and regions. If the URL were a building, the subdomains would be different floors.

- The top-level domain (TLD) is the highest level in the hierarchy of the Internet's Domain Name System. It is the last label of a fully qualified domain name. The most common TLD is .com.

- Subfolders are the first divisions in the content of a website. They allow developers to organize the site so that it is easier for users and search engines to navigate.

- A page is what a user is really looking for, at least according to the search engine. This is the name of a specific web page. Keep your page names as user-friendly as possible to improve rankings.

- Optional parameters allow developers to control which pages Google can crawl on a website. This is useful when a site needs multiple pages for related content while avoiding penalties for duplicate content.

- An anchor name lets search engines focus on a specific part of the page. This is a useful SEO device because it is like a giant neon sign in the URL that says “This is what you are looking for!” Users appreciate pages with anchor names because they don’t have to scroll through blocks of information they don’t need.

To maximize the potential of the page, write the URL in this order:

Protocol > Subdomain > Root domain > Top-level domain > Subfolders > Page > Parameters > Anchor name

For example: https > www.doffitt.com > category > electronics > 11-things-consider-buying-one-laser-printer

Writing a URL in this way organizes the content and preserves the domain authority.
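
The eight parts listed above can be pulled out of an address with Python's standard `urllib.parse` module. The following is a minimal sketch, not a definitive parser: the function name `describe_url` and the `example.com` address are made up for illustration, and the host is split naively on dots, so it assumes a single-label TLD such as .com and would mislabel a host like example.co.uk.

```python
from urllib.parse import parse_qs, urlsplit


def describe_url(url: str) -> dict:
    """Split a URL into the eight parts described above (hypothetical helper)."""
    parts = urlsplit(url)
    labels = (parts.hostname or "").split(".")
    if len(labels) < 2:
        raise ValueError(f"expected a host with at least two labels: {url!r}")

    # Path segments without empty strings, e.g. "/a/b/c" -> ["a", "b", "c"].
    segments = [segment for segment in parts.path.split("/") if segment]

    return {
        "protocol": parts.scheme,
        # Assumption: the TLD is a single label, so the last two labels
        # are the root domain and the TLD.
        "subdomain": ".".join(labels[:-2]),
        "root_domain": labels[-2],
        "tld": labels[-1],
        "subfolders": segments[:-1],
        "page": segments[-1] if segments else "",
        "parameters": parse_qs(parts.query),
        "anchor": parts.fragment,
    }


example = describe_url(
    "https://www.example.com/category/electronics/laser-printers?sort=price#reviews"
)
for name, value in example.items():
    print(f"{name}: {value}")
```

Printing the parts side by side makes it easy to spot a URL whose subfolders or page name say little about the content.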

Focus on Your Meta Tags

There is much debate about whether bad coding has an effect on search engine rankings.

The short answer: yes, it does.

Most developers worry that bad code will slow their page load time and cause usability problems on the site. These problems can hurt your conversion rate and increase your bounce rate, but a badly coded page can also have a profound effect on its ranking potential.

In some cases, coding errors can stop search engines from reading your page at all, which is bad however you look at it. Search engines cannot rank what they cannot understand.

Developers should also be aware of the importance of meta tags.

Search engines want to give users the best online experience, and that means serving unique content. Search engines use meta tags to help determine which pages are most relevant. Developers must therefore play by these rules and keep the meta tags interesting and compact.

Often, developers do not write the content of the meta tags, but they still need to understand how the tags work.

The meta tags that matter here are the title and description tags. When a user does a search, the content of these two tags is the first thing they see in the search results.

As a developer, one way to damage the ranking of your page is to accidentally create duplicate meta tags. In general, search engines don’t like duplicate content, so avoid this.

In addition, it is important to keep the tags short. Remember, it is a title and a description, not a novel. Limit the title tag to 60 characters at most, and keep the description within 160 characters.

If you end up writing the content for some meta tags yourself, be sure to place the important words near the beginning of the title and description. Make each title and description as unique as possible.
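
The length limits and the duplicate check described above are easy to automate. Here is a rough sketch using only Python's standard `html.parser`; the names `MetaTagParser`, `check_meta_tags`, and `find_duplicate_titles` are hypothetical, the sample pages are invented, and the 60 and 160 character limits are simply the figures quoted in this article.

```python
from collections import defaultdict
from html.parser import HTMLParser

TITLE_LIMIT = 60         # limit quoted in the article
DESCRIPTION_LIMIT = 160  # limit quoted in the article


class MetaTagParser(HTMLParser):
    """Collect the <title> text and the meta description of one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def read_meta_tags(html: str) -> tuple[str, str]:
    parser = MetaTagParser()
    parser.feed(html)
    return parser.title.strip(), parser.description.strip()


def check_meta_tags(html: str) -> list[str]:
    """Return a list of human-readable problems; an empty list means no issues found."""
    title, description = read_meta_tags(html)
    problems = []
    if not title:
        problems.append("missing title")
    elif len(title) > TITLE_LIMIT:
        problems.append(f"title is {len(title)} characters (limit {TITLE_LIMIT})")
    if not description:
        problems.append("missing description")
    elif len(description) > DESCRIPTION_LIMIT:
        problems.append(
            f"description is {len(description)} characters (limit {DESCRIPTION_LIMIT})"
        )
    return problems


def find_duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Map each title used by more than one page to the paths that share it."""
    paths_by_title = defaultdict(list)
    for path, html in pages.items():
        title, _ = read_meta_tags(html)
        paths_by_title[title].append(path)
    return {title: paths for title, paths in paths_by_title.items() if len(paths) > 1}


# Invented sample pages: both share the same title on purpose.
page = (
    "<html><head><title>Laser Printers</title>"
    '<meta name="description" content="Compare laser printers by price."></head></html>'
)
pages = {"/printers": page, "/printers-copy": page}
print(check_meta_tags(page))
print(find_duplicate_titles(pages))
```

A check like this could run in a build step so that duplicates are caught before a page ships.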

Give Importance to Your Redirects

Developers are often asked to move content around the site, and that is where redirects come into play.

Redirects are tools that allow developers to divert a user from an old URL to a newly created page.

There are five basic types of redirects:

- 300 – Multiple Choices

- 301 – Moved Permanently

- 302 – Found (temporary move)

- 303 – See Other (alternative redirect for a temporary move)

- 307 – Temporary Redirect (newer redirect for a temporary move)

Among these options, the most important redirects are 301 and 302.

Use a 301 redirect when you:

- Retire a page

- Move a whole page or site somewhere else

- Direct users to the original page after you delete duplicate content

Use a 302 redirect when:

- A page is temporarily unavailable

- You want to experiment with moving to a new domain without damaging history and rankings

- You need to send users to a temporary page while the old one is updated

Some developers claim that 302 is unnecessary because of the 303 and 307 redirects. These redirects perform the same function as 302 with more specific effects: a 303 forces the browser to make a GET request to the new URL, even if the original request was a POST, while a 307 makes the browser repeat the request at the new URL with the same method, GET or POST.

There is only one catch: for SEO purposes, the difference between GET and POST requests rarely matters, so you don’t need to worry about it either.

Stick to the 302 redirect for temporary moves.
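
To make the 301 versus 302 choice concrete, here is a small sketch built on Python's standard `http.server` module. It is an illustration under stated assumptions rather than production code: the paths in `PERMANENT_MOVES` and `TEMPORARY_MOVES`, the `resolve_redirect` helper, and the `RedirectHandler` class are all hypothetical.

```python
from http import HTTPStatus
from http.server import BaseHTTPRequestHandler

# Hypothetical redirect tables: old path -> new path.
PERMANENT_MOVES = {"/old-printers": "/category/electronics/laser-printers"}
TEMPORARY_MOVES = {"/sale": "/maintenance"}


def resolve_redirect(path: str):
    """Return (status, location) for a redirected path, or None if there is no rule."""
    if path in PERMANENT_MOVES:
        # 301: the content has moved for good.
        return HTTPStatus.MOVED_PERMANENTLY, PERMANENT_MOVES[path]
    if path in TEMPORARY_MOVES:
        # 302: the original URL is expected to come back.
        return HTTPStatus.FOUND, TEMPORARY_MOVES[path]
    return None


class RedirectHandler(BaseHTTPRequestHandler):
    """Answer GET requests with the redirect chosen by resolve_redirect."""

    def do_GET(self):
        match = resolve_redirect(self.path)
        if match is None:
            self.send_error(HTTPStatus.NOT_FOUND)
            return
        status, location = match
        self.send_response(status)
        self.send_header("Location", location)
        self.end_headers()


print(resolve_redirect("/old-printers"))
print(resolve_redirect("/sale"))
```

Keeping permanent and temporary moves in separate tables makes the intent of each rule explicit. To try the handler locally, it can be passed to `http.server.HTTPServer`.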

Avoid these common redirection errors

It is common to use 302 redirects when we move a page permanently, but it is a mistake to do so.

Using a 302 redirect for a permanent move is bad because link juice is not transferred by a 302. Link juice is the extra authority that a page gains when external sites link to that page. Because a 302 is supposed to be temporary, search engines do not transfer the link juice to the new page.

Developers often make this mistake because users never notice it. Whether a developer uses a 301 or a 302, users are redirected either way. However, search engines know the difference. Using the wrong one can ruin a page’s ranking potential and cause severe traffic drops.

Another frequent mistake is to redirect all the pages of an old site to the home page of a new site. This not only frustrates users who have to explore the site for a specific page but also deprives existing pages of their link juice.

Your site can lose a lot of traffic if you do this because you will hide “long tail” pages, that is, pages that satisfy highly specific searches.
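
A redirect map can be checked for the blanket home page mistake before a migration goes live. The sketch below is one possible check, with a hypothetical `find_homepage_redirects` helper and invented example.com and example.org URLs: it flags every old URL that has its own path but is redirected to the root of a site.

```python
from urllib.parse import urlsplit


def find_homepage_redirects(redirect_map: dict[str, str]) -> list[str]:
    """Return old URLs with a non-root path whose target is a site's home page."""
    flagged = []
    for old_url, new_url in redirect_map.items():
        old_path = urlsplit(old_url).path.strip("/")
        new_path = urlsplit(new_url).path.strip("/")
        # A deep old page pointing at "/" loses its page-level match.
        if old_path and not new_path:
            flagged.append(old_url)
    return flagged


# Invented migration map: old URL -> new URL.
migration = {
    "https://old.example.com/": "https://www.example.org/",
    "https://old.example.com/printers/laser": "https://www.example.org/electronics/laser-printers",
    "https://old.example.com/printers/inkjet": "https://www.example.org/",
}
print(find_homepage_redirects(migration))
```

Each flagged URL is a candidate for a page-to-page redirect instead.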

Maximize Crawler Access

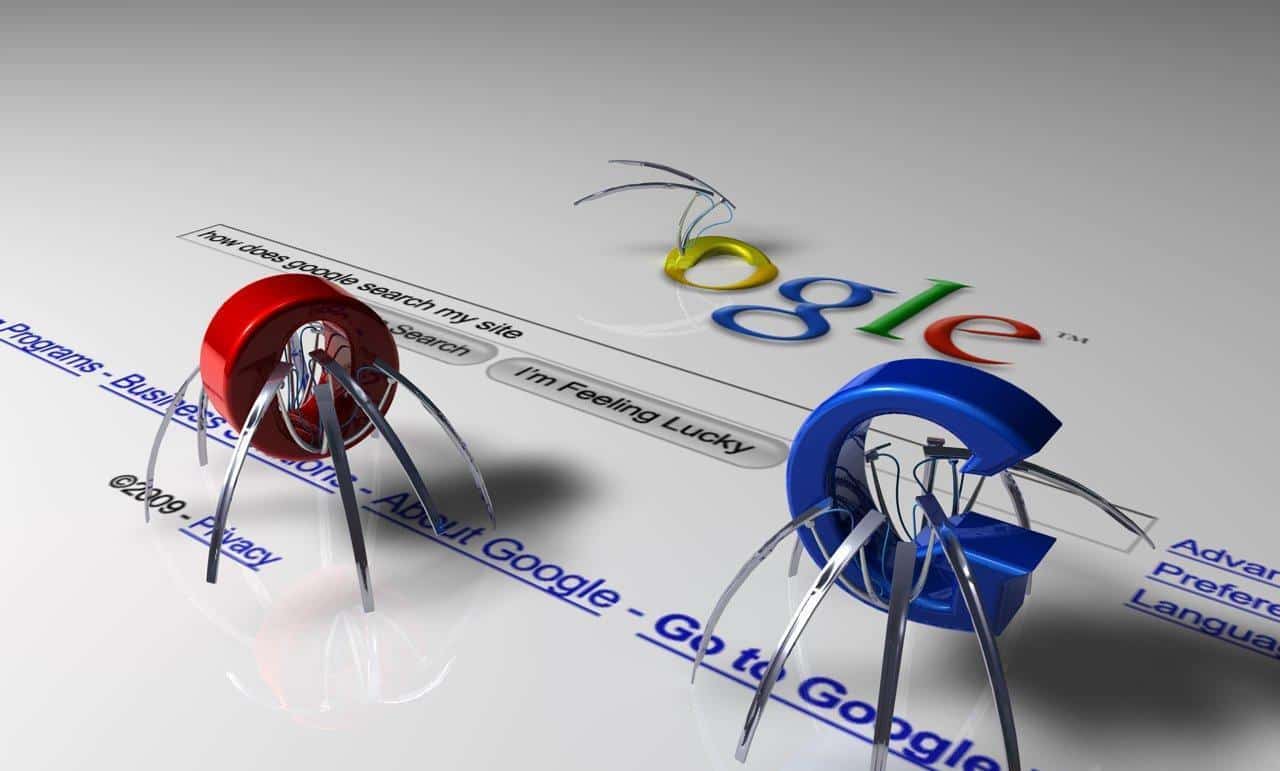

Search engines use “bots” or “spiders” that crawl existing pages and search for useful content.

Crawler access is often an underrated part of SEO because it is complicated to implement and its effects are hard to see. Search engines like people to think they have indexed all the information on the internet. However, even mega businesses have limited resources, so they need to be selective about which pages they index.

Developers can use this to their advantage and have search engines crawl the pages that matter most to them.

The best way to do this is to give the site a solid architecture. Work with your SEO experts to build your site so that crawlers can find what’s important every time they visit.
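
One common way to tell crawlers which paths to skip is a robots.txt file. The sketch below uses Python's standard `urllib.robotparser` to check how a set of rules would be read by a crawler. The rules, the paths, and the example.com host are hypothetical and only illustrate the idea of steering crawlers toward the pages that matter.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of cart and internal search pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    return robots


parser = build_parser(ROBOTS_TXT)
for url in (
    "https://www.example.com/category/electronics/laser-printers",
    "https://www.example.com/cart/checkout",
):
    print(url, parser.can_fetch("Googlebot", url))
```

Running a check like this against the real robots.txt before deployment helps confirm that important pages are not blocked by accident.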

Conclusion

Although SEO may seem intimidating, it is not as difficult as it seems.

It is true that several variables are involved in ranking well in search engines, but keeping the five points above in mind will give you a solid advantage.

We recommend reading our SEO Guide for Beginners; it is an excellent starting point for web developers.